Good practices for creating automated functional tests

In this article I would like to summarize best practices for creating a set of automated tests that are efficient and easy to maintain and expand. What is more, these practices help the entire development team see automated tests as an important and useful part of the process.

These conclusions are the result of almost 1.5 years of work on automating functional tests of a web application. My team managed to apply some of these practices from the beginning of the project. Others were adopted during development, and the consequences of some of our mistakes stayed with us for the whole project. Now our goal is to avoid them in the next one.

Note that this post is not about why we should automate functional tests. Nor is it about the technical details of writing tests in any particular programming language or framework.

Initial investigations

Before we start writing any code, we should think about the best possible technical solution.

The goal of this article is not to promote any specific framework or library. However, some crucial aspects should be considered. First of all, we should think about our technical requirements: we have to ensure that the framework under consideration provides the functionality our project needs and can be used in the available environment.

The second thing is the team's experience. It is always difficult to start using a tool that is new to the whole development team. Technical support from the vendor and the size of the community, where even advanced problems may already have been solved and described in detail, also matter here. Both can considerably reduce the effort needed to prepare tests with a given framework.

Finally, the prices of specific products have to be researched, and costs should be discussed with stakeholders as early as possible.

Automated functional tests as a technical task

It is now especially clear to my whole team that test code should be reviewed in the same way as production code. In addition, writing these tests should be treated as a normal technical task within a sprint. Code quality concerns (e.g. maintainability) are especially important if the tests are meant to be easy to extend and debug, which should obviously be the team's goal. It is crucial to be aware of this from the very beginning of the project. Otherwise, after some time the effort needed to refactor all the tests may become too large, and the resulting problems will persist for the rest of development.

One small comment here: test code should of course be reviewed carefully by the development team, but the approach should be pragmatic. Some solutions may be much easier to implement using practices that are often considered “bad”, such as static methods or classes. Even so, if this is done carefully and deliberately, and our experience tells us it will not have any negative influence on our work, it may still be a good enough solution.

While on the subject of code quality, one more thing has to be mentioned. Common problems of inexperienced teams in this area can often be resolved simply by applying popular, well-known patterns for building an automation framework. As I wrote at the beginning, I don't want to describe any particular solution, technology or framework here. Nevertheless, the Page Object pattern for functional tests of web applications is an especially telling example. Used skilfully, it resolves the problem of code duplication and often makes it possible to prepare new tests quickly, without repeating the same actions (such as locating elements on the page) over and over. It also makes tests much more readable.
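To make the idea concrete, here is a minimal sketch of the Page Object pattern. Python is used only for illustration. To keep it self-contained, a hypothetical in-memory `FakeBrowser` stands in for a real browser driver, and the pages, locators and credentials (`LoginPage`, `DashboardPage`, `#username`, `alice`) are invented for the example, not taken from our project.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeBrowser:
    """Hypothetical in-memory stand-in for a real browser driver.

    It stores typed text per locator and records clicks, so the example
    runs without a browser or a web application.
    """
    values: Dict[str, str] = field(default_factory=dict)
    clicks: List[str] = field(default_factory=list)
    current_page: str = "login"

    def type_into(self, locator: str, text: str) -> None:
        self.values[locator] = text

    def click(self, locator: str) -> None:
        self.clicks.append(locator)
        # Simulated application behaviour: valid credentials open the dashboard.
        if (locator == "#login-button"
                and self.values.get("#username") == "alice"
                and self.values.get("#password") == "secret"):
            self.current_page = "dashboard"


class LoginPage:
    """Page Object: the only place that knows the login page's locators."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def log_in(self, user: str, password: str) -> None:
        self.browser.type_into(self.USERNAME, user)
        self.browser.type_into(self.PASSWORD, password)
        self.browser.click(self.SUBMIT)


class DashboardPage:
    """Page Object for the page shown after a successful login."""

    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def is_displayed(self) -> bool:
        return self.browser.current_page == "dashboard"


# Tests use page objects instead of raw locators, so when a locator changes
# it is fixed once in the page class rather than in every test.
def test_valid_user_reaches_dashboard() -> None:
    browser = FakeBrowser()
    LoginPage(browser).log_in("alice", "secret")
    assert DashboardPage(browser).is_displayed(), "Dashboard should open after a valid login"


def test_wrong_password_stays_on_login_page() -> None:
    browser = FakeBrowser()
    LoginPage(browser).log_in("alice", "wrong")
    assert not DashboardPage(browser).is_displayed(), "A wrong password must not open the dashboard"
```

A new login-related test now takes two or three lines, and neither test repeats how the form is filled in.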

The lack of a strict, technical approach to automated tests is a dangerous pattern. Tests soon become extremely hard to maintain, and adding a new test very often means copying a piece of code from an old one and pasting it into the new one with small changes. Moreover, refactoring in the late phases of development can be time-consuming, so it is especially important to have a clear vision of what should be done at the beginning of development to avoid such work. Of course, not everything can be predicted, and sometimes significant changes in the architecture of, or approach to, functional tests are unavoidable.

Automated functional tests as a part of the process

Naturally, once a team has created a couple of functional tests, it wants to run them after every solution build to ensure that everything still works after any change in the production code. My team did this from the beginning of the project, and it seemed to work. However, there are still a few issues to consider, such as execution time, presentation of results and keeping builds “green”.

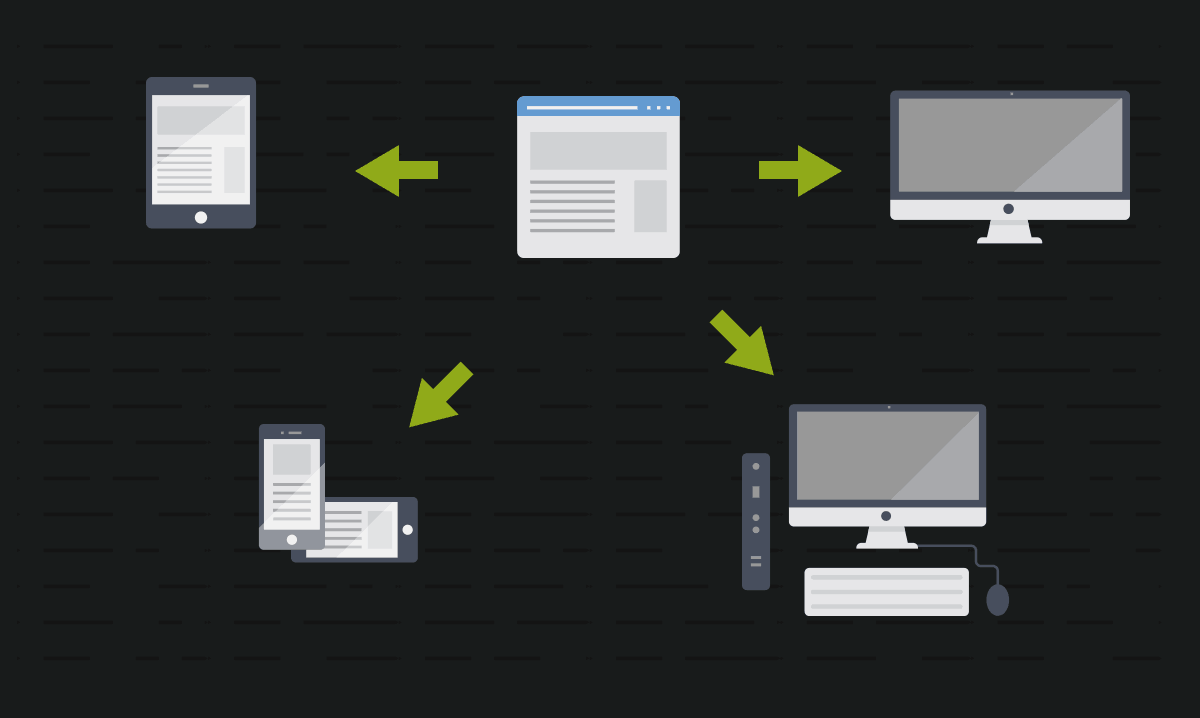

If there are only a few tests that don't take much time (I mean less than 10 minutes), there is no problem. However, as the test set grows and execution time increases rapidly, the development team's attitude to these tests can change. Even waiting 30 minutes for test results can be uncomfortable for a team, so what if running all the tests takes a couple of hours? In such cases we should pick a set of primary tests that verify the basic functionality of the application and run only those after every solution build on CI, as typical smoke tests. The rest can be executed, for example, once a day as part of regression testing. Of course, there are other solutions to this issue, such as running all tests in parallel, but that demands many more resources and more time to configure the whole environment.
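A minimal sketch of such a split, in Python for illustration only. The `tagged` decorator, the registry and the suite names are hypothetical; most test frameworks offer their own mechanism (test categories, traits or markers), which would be the better choice in practice. The idea is that the job after every build runs only `smoke`, while a nightly job runs `regression`, i.e. everything.

```python
from typing import Callable, Dict, List, Set

TestFn = Callable[[], None]

# Hypothetical registry mapping each test function to its tags.
REGISTRY: Dict[TestFn, Set[str]] = {}


def tagged(*tags: str) -> Callable[[TestFn], TestFn]:
    """Decorator that records which suites a test belongs to."""
    def register(test: TestFn) -> TestFn:
        REGISTRY[test] = set(tags)
        return test
    return register


def select(suite: str) -> List[TestFn]:
    """Return the tests to run for a suite.

    'regression' -> every registered test (e.g. run once a day);
    any other name -> only tests carrying that tag (e.g. 'smoke' after every build).
    """
    if suite == "regression":
        return list(REGISTRY)
    return [test for test, tags in REGISTRY.items() if suite in tags]


@tagged("smoke")
def test_home_page_loads() -> None:
    pass  # placeholder for a fast check of basic functionality


@tagged("smoke")
def test_user_can_log_in() -> None:
    pass  # placeholder


@tagged("slow")
def test_full_report_export() -> None:
    pass  # placeholder for a long-running scenario


def run(suite: str) -> int:
    """Run the selected tests and return how many of them raised an exception."""
    failures = 0
    for test in select(suite):
        try:
            test()
        except Exception:
            failures += 1
    return failures
```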

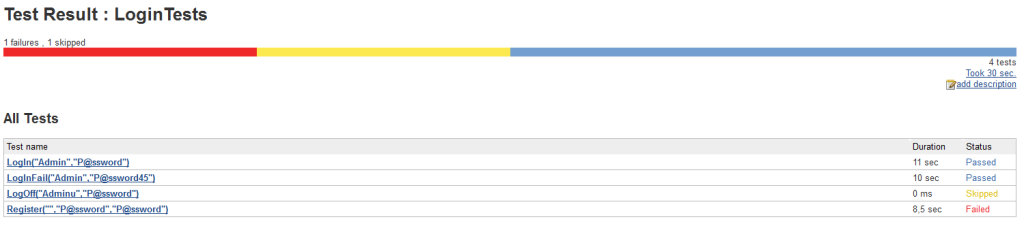

After a test build finishes, we should check the results. We know whether the build passed or failed, and in the latter case we should be able to analyse the results and find out what went wrong. Every team member should be able to do this, not only the person who wrote the test or maintains the test set. If someone sees a meaningless error and many unreadable lines of text on CI, it can be hard to find out what went wrong. Because of that, test code should be written so that all possible exceptions are caught and logged and every assertion is clear. Moreover, Jenkins, for example, has many useful plugins that make test results more readable and can be installed easily on the CI server. In my last project, the simple MSTest plugin alone made test results much more understandable for everyone by displaying them in a user-friendly way that made it easy to see what went wrong.

There are of course other ways to present test results clearly, but the most important thing is to remember the main goal: every team member should be able to recognize a problem efficiently and either solve it or at least tell the right person what needs to be fixed.

If automated tests have been implemented, are run after every build and are supposed to bring any value to the project, they have to be treated as an important part of the process. Even more importantly, whenever a failure occurs, the results have to be analysed immediately. If some tests fail constantly and nothing is done about it, the build stays “red”, which undermines confidence that the tests provide trustworthy information about the state of the application. Hearing a team member say “This test always fails, it is normal” kills one of the main ideas of automated testing. If we accept that some tests fail because of a “known issue”, we should be aware that we cannot tell whether additional bugs are hidden behind that issue. What is more, the development team can easily miss the fact that other tests have failed. To avoid this, keeping the tests green should be a crucial task for the whole team. Simple rules such as “gated check-in”, “we do not leave red tests at the end of the day” or “before every daily stand-up we know what is wrong with the tests” should be accepted by the entire team.

Another thing worth mentioning is the ability of any team member to run the tests locally. In many cases it makes the whole development process smoother to verify everything on a developer's machine before introducing changes to the main branch. Running tests locally should be easy, so that no team member has to “manually change anything in config files” to execute them.
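One possible approach, sketched in Python under the assumption that test settings are read from environment variables with defaults that suit a typical developer machine. The variable names (`TEST_BASE_URL`, `TEST_BROWSER`, `TEST_TIMEOUT_S`) and default values are hypothetical. The CI server overrides what it needs, while locally the tests run without editing anything.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    base_url: str
    browser: str
    timeout_s: float


# Hypothetical defaults suited to a developer's machine.
DEFAULTS = {
    "TEST_BASE_URL": "http://localhost:8080",
    "TEST_BROWSER": "chrome",
    "TEST_TIMEOUT_S": "10",
}


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read settings from the environment, falling back to local defaults.

    CI sets the variables it needs; a developer running the tests
    locally does not have to touch any config file.
    """
    env = os.environ if env is None else env

    def get(name: str) -> str:
        return env.get(name, DEFAULTS[name])

    return Settings(
        base_url=get("TEST_BASE_URL"),
        browser=get("TEST_BROWSER"),
        timeout_s=float(get("TEST_TIMEOUT_S")),
    )
```

Passing the environment in as a mapping also makes the loader itself easy to check without changing the real process environment.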

Conclusion

The main conclusion after completing the project is to treat test automation as an important technical task within a sprint, not as insignificant extra work. Only then will automated functional tests bring true value to a project. Every team member should trust that the test results are reliable and be able to perform basic actions with them, such as running the tests and analysing their results. Moreover, the tests should be easy to maintain and expand.