Infrastructure as Code – part 3 – Containers

The previous article presented virtualization as a tool that provides good conditions for application development by making the local environment reflect the configuration of the production infrastructure.

In addition, virtualization is extremely useful for administrators who want to test planned configuration changes and verify that provisioning processes work correctly. It works well in projects where we deal with a small distributed environment consisting of a few simple components, such as an application server, a database or a message queue. For big and complex configurations, it may turn out that other solutions are needed. Containers, the topic of this article, are a concept that has been known for a long time, but over the last few years they have been enjoying a second youth. This is thanks to the huge popularity of a tool called Docker, which is used to manage containers.

LXC or Linux Containers

What are containers? They are built on a set of kernel features that isolate processes within the operating system. Thanks to these features it is possible to completely separate certain processes from each other. The result is similar to virtualization, but it does not involve a hypervisor, an element essential to classic virtualization. By eliminating this layer we save a significant amount of resources, which is why containers are sometimes called lightweight virtualization. The only element that containers must share with each other is the host kernel.
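On Linux, much of this isolation is provided by kernel namespaces (with control groups handling resource limits). As a rough illustration, the Python sketch below lists the namespaces the current process belongs to by reading `/proc/self/ns`. The function name `current_namespaces` is our own, and the sketch assumes a Linux host; on other systems it simply returns an empty dictionary.

```python
import os


def current_namespaces(ns_dir="/proc/self/ns"):
    """Return a mapping of namespace type to identifier for this process.

    Assumes a Linux host; if the directory does not exist or cannot be
    read, an empty dict is returned.
    """
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may be unreadable without extra privileges.
            continue
    return namespaces


if __name__ == "__main__":
    for ns_type, ident in current_namespaces().items():
        print(f"{ns_type:10s} {ident}")
```

Run once on the host and once inside a container, the script would typically print different identifiers for namespaces such as `pid` or `net`, which is what keeps the two sets of processes apart.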

Docker

This tool introduced revolutionary changes in the way we work with large, distributed applications. However, to explain what makes it so unique, we must first get to know the Docker ecosystem, which includes the following components:

- image – an image is the source of data for a container; it includes an application with all its dependencies; it is something like an ISO image or a VMDK file

- repository – a repository is a store in which images are kept together with their change history; working with it resembles working with the Git version control system

- container – put simply, a container is a running image; it contains at least one running process, and the first process started determines the container’s state: when that process exits, the container stops.

Docker Hub

It is a platform that hosts public and private repositories, which serve as image storage for every registered user. Private repositories are a paid feature. The official public repositories, where software vendors publish images of their products, deserve special attention. Thanks to them, running an application that has such a repository comes down to downloading the image and issuing a command that starts a container.
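The two steps mentioned above, downloading an image and starting a container, map onto the `docker pull` and `docker run` commands. The Python sketch below only builds these command lines and prints them, so it can be tried without Docker installed. The helper name `pull_and_run_commands` and the container name `web` are our own illustrative choices; `nginx` is used simply as an example of an official image.

```python
import shlex


def pull_and_run_commands(image, name=None):
    """Build the two Docker CLI invocations: pull the image, then run it."""
    pull = ["docker", "pull", image]
    run = ["docker", "run", "-d"]  # -d: run the container detached, in the background
    if name:
        run += ["--name", name]  # give the container a readable name
    run.append(image)
    return [pull, run]


if __name__ == "__main__":
    # "nginx" is only an example image; "web" is a hypothetical container name.
    for cmd in pull_and_run_commands("nginx", name="web"):
        print(shlex.join(cmd))
```

To actually execute the commands, each list could be passed to `subprocess.run`, assuming the Docker CLI is available on the machine.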

Dockerfile

It is a simple text file containing the set of instructions used to build an image automatically. It enables:

- selecting the image that serves as the base for the newly built image

- running commands that modify the content of the image

- copying files into the image

- pre-configuring the container in which the image will be started

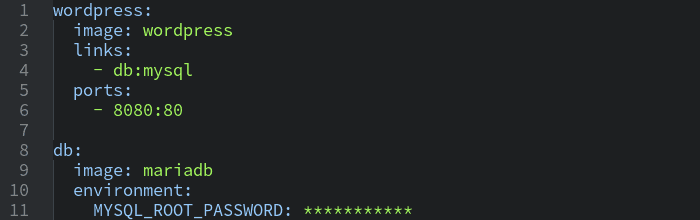
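To make the four capabilities listed above concrete, here is a small Python sketch that renders a Dockerfile from its parts. Each capability maps onto one Dockerfile instruction: `FROM`, `RUN`, `COPY` and `CMD`. The function `render_dockerfile`, the file `app.py` and the chosen package are hypothetical and serve only as an illustration.

```python
import json


def render_dockerfile(base_image, run_commands=(), copies=(), cmd=None):
    """Render Dockerfile text from a base image, commands, files and a start command."""
    lines = [f"FROM {base_image}"]                          # select the base image
    lines += [f"RUN {command}" for command in run_commands]  # modify the image content
    lines += [f"COPY {src} {dst}" for src, dst in copies]    # copy files into the image
    if cmd:
        # Exec form of CMD is a JSON array: the default process of the container.
        lines.append("CMD " + json.dumps(list(cmd)))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All names below (package, paths) are assumptions made for the example.
    print(render_dockerfile(
        "python:3.12-slim",
        run_commands=["pip install flask"],
        copies=[("app.py", "/app/app.py")],
        cmd=["python", "/app/app.py"],
    ))
```

Saving the printed text as a file named `Dockerfile` and running `docker build` in the same directory would then produce an image, provided the referenced files exist.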

Docker Compose

It is a tool for automating the management of applications composed of several containers. A configuration file defines the names of the images from which containers will be launched, the container parameters, and the relationships between the containers. Thanks to Docker Compose, starting an application consisting of several containers on one machine comes down to issuing one simple command: docker-compose up.
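The idea of images, parameters and relationships between containers can be sketched in a few lines of Python. The dictionary below loosely mirrors the shape of a Compose file, and the function derives an order in which the containers could be started so that dependencies come first. This is a conceptual sketch, not how Compose itself is implemented; the service names and the `example/web` image are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical application description, loosely modelled on a Compose file.
SERVICES = {
    "db": {"image": "postgres", "depends_on": []},
    "queue": {"image": "rabbitmq", "depends_on": []},
    "web": {"image": "example/web", "depends_on": ["db", "queue"]},
}


def start_order(services):
    """Return service names ordered so that every dependency starts first."""
    graph = {
        name: set(spec.get("depends_on", []))
        for name, spec in services.items()
    }
    # static_order() raises graphlib.CycleError if services depend on each other.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for name in start_order(SERVICES):
        print(f"start {name} from image {SERVICES[name]['image']}")
```

In this example `web` is always started last, because it depends on both `db` and `queue`.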

Docker Swarm

As with Docker Compose, the task here is to manage an application composed of multiple containers. The difference is that the containers run on many distributed machines, each of which is running Docker. In short, Docker Swarm is used to manage a cluster of machines that run Docker. When we issue a single command to run a container, Docker Swarm chooses a suitable machine from the pool, downloads the selected image there, and starts the container, passing it all the necessary information about the other containers running in the pool.
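The step of choosing a machine from the pool can be illustrated with a deliberately simplified Python sketch. It places each new container on the node that currently runs the fewest containers, which loosely resembles a "spread" placement strategy. A real scheduler also takes resources and constraints into account; the `Node` class, the `schedule` function and the node names are our own assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A machine in the pool together with the images it is currently running."""
    name: str
    containers: list = field(default_factory=list)


def schedule(nodes, image):
    """Place a container on the least loaded node and return that node."""
    if not nodes:
        raise ValueError("the pool is empty")
    # min() returns the first node with the smallest count, so ties go to
    # the node listed earliest in the pool.
    target = min(nodes, key=lambda node: len(node.containers))
    target.containers.append(image)
    return target


if __name__ == "__main__":
    pool = [
        Node("node-a", ["postgres", "rabbitmq"]),
        Node("node-b"),
        Node("node-c", ["nginx"]),
    ]
    for image in ["example/web", "example/worker", "example/api"]:
        print(f"{image} -> {schedule(pool, image).name}")
```

The point of the sketch is only that the user asks for a container, while the choice of machine is made by the cluster manager.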

Conclusion

Modern software engineering offers many interesting ways to solve the “it works for me” problem, i.e. a situation in which an application runs without issues on a developer’s machine but does not work correctly once started in a production environment. First of all, we should pay attention to automation, because manual work significantly increases the probability of error. An important element that links the developer’s environment with production and keeps them compatible is provisioning, a method of automating configuration management; because the infrastructure is expressed as code, we can version it and tie it closely to the software version. Virtualization moves the environment in which applications run from a physical machine to a virtual one, so we gain an additional layer of abstraction that helps keep environments consistent. For a large distributed infrastructure, as well as for a big team, the best solution may be containers, which isolate individual elements of the application into independent units that can be managed in a straightforward and extremely flexible manner. We use all of these techniques to achieve the highest possible quality in our solutions.